Challenges

1) Line following

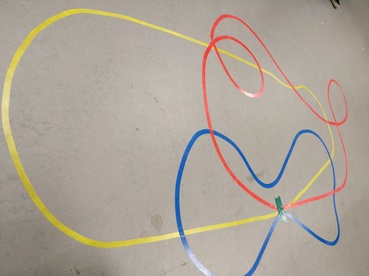

- Robot should be able to follow a colored line

- When it reaches the end of the track, it will follow the next one:

- 2021-2022 Colors to be defined

2) Go To

Robot will be put on a known initial position (for example a cross marked on the ground), we will then provide a goal position and orientation \(x, y, \theta\) (using international system units which are meters and radians).

The robot should move to reach this position/orientation.

3) Odometry

Robot will be moved (using "passive wheels" mode) on the round.

After some time, you will indicate where the robot is located relative to its initial position ($$x, y, \theta$), using only the motor encoders.

4) Drawing the map

After following the line of challenge 1, the robot will produce a skyview map (image) of the track.

Guide

Here are the methods we recommend implementing for the odometry part:

- \(direct\_kinematics(v_{gauche}, v_{droit})\) → \((\dot x, \dot \theta)\)

- Takes as parameters wheel speeds (rad/s) and returns linear (m/s) and angular (rad/s) speeds of the robot

- \(odom(\dot x, \dot \theta, dt)\) → \((dx, dy, d\theta)\)

- Takes as parameters linear and angular speed of the robot, and returns the position (m) and orientation (rad) variation in the robot frame

- \(tick\_odom(x_{n-1}, y_{n-1}, \theta_{n-1}, \dot x, \dot \theta, dt)\) → \((x_n, y_n, \theta_n)\)

- Takes as parameters the position and orientation of the robot in the world frame, the variation of the robot position and orientation in the robot frame, and returns new position and orientation of the robot in the world frame

For control:

- \(inverse\_kinematics(\dot x, \dot \theta)\) → \((v_{gauche}, v_{droit})\)

- Takes as parameters the linear and angular target speeds, and computes the speed for left and right wheels

- \(go\_to\_xya(x, y, \theta)\)

- Takes the robot to a given position in the world frame

- \(pixel\_to\_robot(x, y)\) → \(x, y, z\)

- Takes as parameter the position of a pixel in the image and computes the position of the pixel in the robot frame

- \(pixel\_to\_world(x, y)\) → \(x, y, z\)

- Same, but in the world frame