Gradient

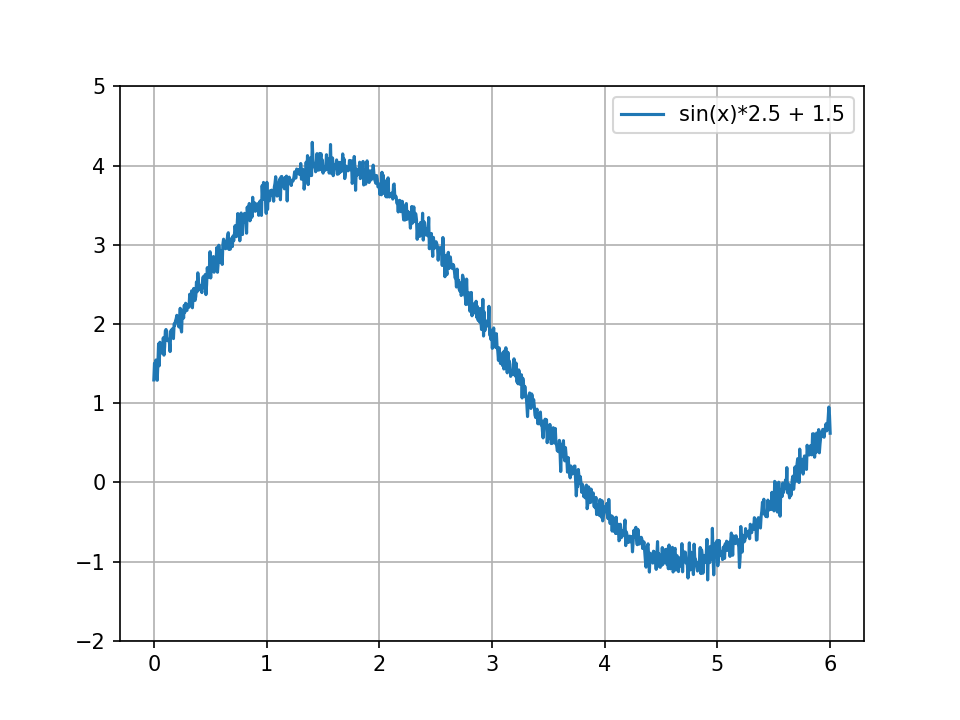

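Suppose we have a differentiable function $f$ of several variables. Near a given point, $f$ can be approximated by a linear function.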

Let's take, for example, a function of two variables, $f(x, y)$.

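In that case, the linear approximation becomes:

$$f(x + \Delta x,\ y + \Delta y) \approx f(x, y) + \frac{\partial f}{\partial x}\,\Delta x + \frac{\partial f}{\partial y}\,\Delta y$$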

Note: the derivatives above are taken at the point $(x, y)$.
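A quick numerical check of this approximation, as a minimal Python sketch. The function $f(x, y) = x^2 y + \sin y$ and the point $(1, 2)$ are hypothetical choices, not taken from the slides, and the partial derivatives are worked out by hand. The gap between $f(x + \Delta x, y + \Delta y)$ and the linear approximation should shrink roughly like the square of the step.

```python
import math

# Hypothetical example function: f(x, y) = x^2 * y + sin(y)
def f(x, y):
    return x**2 * y + math.sin(y)

# Partial derivatives of f, worked out by hand
def df_dx(x, y):
    return 2 * x * y

def df_dy(x, y):
    return x**2 + math.cos(y)

def linear_approx(x, y, dx, dy):
    """First-order approximation of f(x + dx, y + dy) around the point (x, y)."""
    return f(x, y) + df_dx(x, y) * dx + df_dy(x, y) * dy

x, y = 1.0, 2.0
for step in (0.1, 0.01, 0.001):
    exact = f(x + step, y + step)
    approx = linear_approx(x, y, step, step)
    print(f"step={step:<6} exact={exact:.6f} approx={approx:.6f} "
          f"error={abs(exact - approx):.2e}")
```

Dividing the step by 10 should divide the error by roughly 100, which is what a first-order approximation predicts.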


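In matrix form, writing $\mathbf{x} = (x, y)^\top$ and $\Delta\mathbf{x} = (\Delta x, \Delta y)^\top$:

$$f(\mathbf{x} + \Delta\mathbf{x}) \approx f(\mathbf{x}) + \begin{pmatrix} \dfrac{\partial f}{\partial x} & \dfrac{\partial f}{\partial y} \end{pmatrix} \begin{pmatrix} \Delta x \\ \Delta y \end{pmatrix}$$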

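We call $\nabla f = \left(\dfrac{\partial f}{\partial x}, \dfrac{\partial f}{\partial y}\right)^\top$ the gradient of $f$.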
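In code, the matrix form is just a dot product between the gradient vector and the step, and it extends unchanged to more than two variables. The sketch below assumes a hypothetical three-variable function $f(x_1, x_2, x_3) = x_1 x_2 + x_3^2$ whose gradient $(x_2, x_1, 2x_3)$ is computed by hand; the helper names are illustrative.

```python
# Hypothetical example with three variables: f(x) = x1*x2 + x3^2
def f(x):
    x1, x2, x3 = x
    return x1 * x2 + x3**2

def grad_f(x):
    """Gradient of f: the vector of its partial derivatives (worked out by hand)."""
    x1, x2, x3 = x
    return [x2, x1, 2 * x3]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def linear_approx(x, dx):
    """Matrix form of the first-order approximation: f(x + dx) ~ f(x) + grad_f(x)^T dx."""
    return f(x) + dot(grad_f(x), dx)

x = [1.0, 2.0, 3.0]
dx = [0.01, -0.02, 0.01]
x_new = [xi + di for xi, di in zip(x, dx)]
print("exact: ", f(x_new))
print("approx:", linear_approx(x, dx))
```

For this small step, the exact value and the approximation differ by about $10^{-4}$.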

Computing the gradient

For example, let's take
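When a gradient is derived by hand, a common sanity check is to compare it with a finite-difference estimate. This is a sketch under stated assumptions: the function $f(x, y) = x^2 y + \sin y$ is a hypothetical illustration (not the example function from the slide), and the central-difference step `h = 1e-6` is an arbitrary but typical choice.

```python
import math

def numerical_gradient(f, x, h=1e-6):
    """Central finite-difference estimate of the gradient of f at point x."""
    grad = []
    for i in range(len(x)):
        x_plus = list(x)
        x_minus = list(x)
        x_plus[i] += h
        x_minus[i] -= h
        grad.append((f(x_plus) - f(x_minus)) / (2 * h))
    return grad

# Hypothetical example function: f(x, y) = x^2 * y + sin(y)
def f(p):
    x, y = p
    return x**2 * y + math.sin(y)

def analytic_gradient(p):
    """Partial derivatives computed by hand: (2xy, x^2 + cos y)."""
    x, y = p
    return [2 * x * y, x**2 + math.cos(y)]

point = [1.0, 2.0]
print("analytic: ", analytic_gradient(point))
print("numerical:", numerical_gradient(f, point))
```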

Gradient descent

Suppose we want to minimize a function $f$.

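Idea: start at an initial point and repeatedly take a small step in the direction in which $f$ decreases the fastest.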

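The gradient provides a local, linear approximation of $f$:

$$f(\mathbf{x} + \Delta\mathbf{x}) \approx f(\mathbf{x}) + \nabla f(\mathbf{x})^\top \Delta\mathbf{x}$$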

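It gives the direction of steepest ascent of $f$, and $-\nabla f$ gives the direction of steepest descent: among all steps $\Delta\mathbf{x}$ of a fixed length, the term $\nabla f(\mathbf{x})^\top \Delta\mathbf{x}$ is largest when $\Delta\mathbf{x}$ points along $\nabla f(\mathbf{x})$ and smallest when it points the opposite way.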
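The steepest-descent claim can be checked numerically. This sketch assumes a hypothetical function $f(x, y) = x^2 + 3y^2$, evaluates the first-order change $\nabla f^\top \mathbf{u}$ for many unit directions $\mathbf{u}$ at the point $(1, 1)$, and keeps the most negative one; up to the angular resolution of the scan, it should coincide with $-\nabla f / \lVert \nabla f \rVert$.

```python
import math

# Hypothetical function f(x, y) = x^2 + 3y^2, with gradient (2x, 6y)
def grad_f(x, y):
    return (2 * x, 6 * y)

gx, gy = grad_f(1.0, 1.0)

# First-order change of f along the unit direction u = (cos t, sin t): grad_f . u
def first_order_change(t):
    return gx * math.cos(t) + gy * math.sin(t)

# Scan 3600 directions and keep the one along which f decreases fastest
angles = [2 * math.pi * k / 3600 for k in range(3600)]
best_t = min(angles, key=first_order_change)
best_u = (math.cos(best_t), math.sin(best_t))

# Normalized negative gradient, for comparison
norm = math.hypot(gx, gy)
neg_grad_dir = (-gx / norm, -gy / norm)

print("best direction found:", best_u)
print("-grad f / |grad f|:  ", neg_grad_dir)
```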


The algorithm is then:

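- Select an initial guess $\mathbf{x}_0$.

- Compute the gradient $\nabla f(\mathbf{x}_k)$ at the current point.

- Update $\mathbf{x}_{k+1} = \mathbf{x}_k - \eta\, \nabla f(\mathbf{x}_k)$, where $\eta > 0$ is the learning rate.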

- Repeat until convergence.
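The steps above can be sketched in Python as follows. The stopping rule (gradient norm below `tol`), the default learning rate, and the test function $f(x, y) = (x - 3)^2 + 2(y + 1)^2$ are assumptions made for illustration; "until convergence" can also be defined in other ways, for example by a small change in $f$ between iterations.

```python
def gradient_descent(grad, x0, learning_rate=0.1, tol=1e-8, max_iter=10_000):
    """Plain gradient descent.

    grad: function returning the gradient of f at a point, as a list of floats
    x0: initial guess (list of floats)
    Stops when the gradient norm falls below tol (one possible convergence
    criterion) or after max_iter updates. Returns (point, number of updates).
    """
    x = list(x0)
    for k in range(max_iter):
        g = grad(x)
        if sum(gi * gi for gi in g) ** 0.5 < tol:
            return x, k
        # Update: x_{k+1} = x_k - learning_rate * grad f(x_k)
        x = [xi - learning_rate * gi for xi, gi in zip(x, g)]
    return x, max_iter

# Hypothetical test function f(x, y) = (x - 3)^2 + 2(y + 1)^2, minimum at (3, -1)
def grad_f(p):
    x, y = p
    return [2 * (x - 3), 4 * (y + 1)]

x_min, n_updates = gradient_descent(grad_f, [0.0, 0.0], learning_rate=0.1)
print(x_min, n_updates)
```

With $\eta = 0.1$, each update shrinks the distance to the minimum by a factor $0.8$ along $x$ and $0.6$ along $y$, so the iterates approach $(3, -1)$ geometrically.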


We can now find the minimum of $f$:

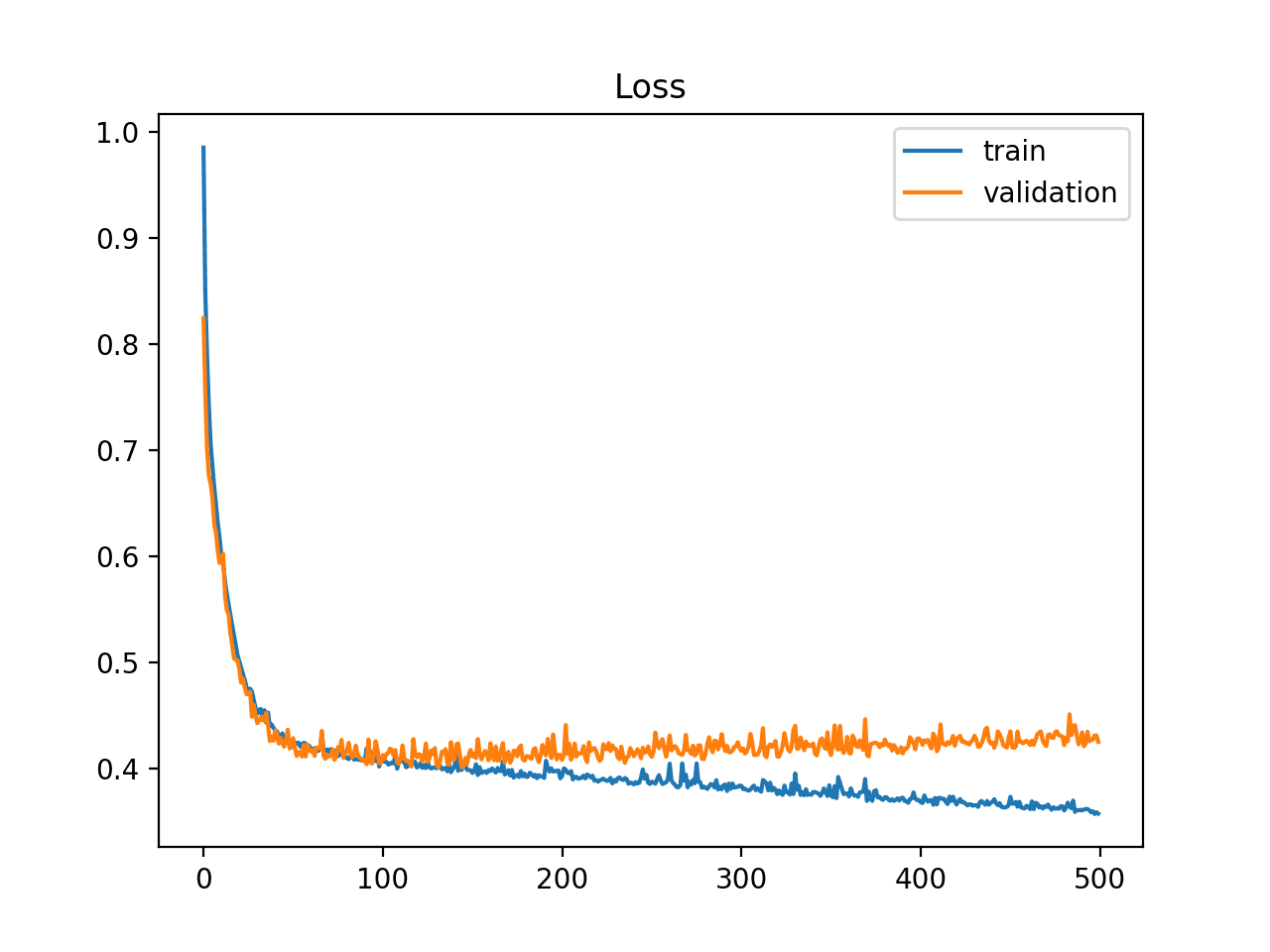

Problems remain:

- Which model should we use?

- How do we compute the gradient?

- How do we choose or adjust the learning rate?

- How do we tackle overfitting and underfitting?
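To see why the choice of learning rate matters, consider the hypothetical one-dimensional case $f(x) = x^2$, whose gradient is $2x$. Each update gives $x_{k+1} = (1 - 2\eta)\,x_k$, so gradient descent converges to $0$ only when $0 < \eta < 1$: a small $\eta$ converges slowly, a value close to $1$ oscillates around the minimum, and $\eta > 1$ diverges. A minimal sketch:

```python
def run(learning_rate, x0=1.0, steps=50):
    """Gradient descent on the hypothetical f(x) = x^2, whose gradient is 2x."""
    x = x0
    for _ in range(steps):
        x = x - learning_rate * 2 * x
    return x

for lr in (0.01, 0.1, 0.9, 1.1):
    print(f"learning_rate={lr}: x after 50 steps = {run(lr):.3e}")
```

After 50 steps, $\eta = 0.01$ is still far from $0$ (about $0.36$), $\eta = 0.1$ and $\eta = 0.9$ are both close to $0$ (the latter alternating in sign along the way), and $\eta = 1.1$ has blown up to several thousand.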

Perceptron


A perceptron is a function modelling a neuron, with the following architecture:


The output of the perceptron is:

$$y = \sigma\left(\sum_{i=1}^{n} w_i x_i + b\right)$$

Where:

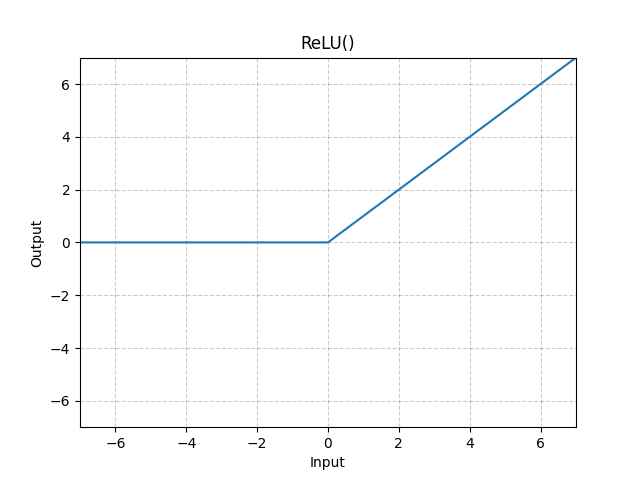

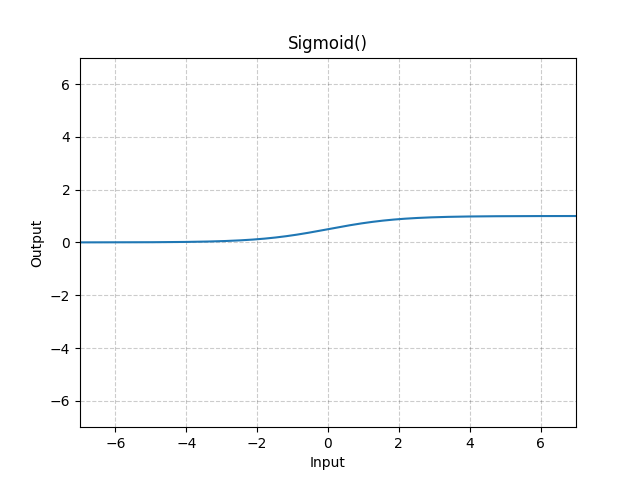

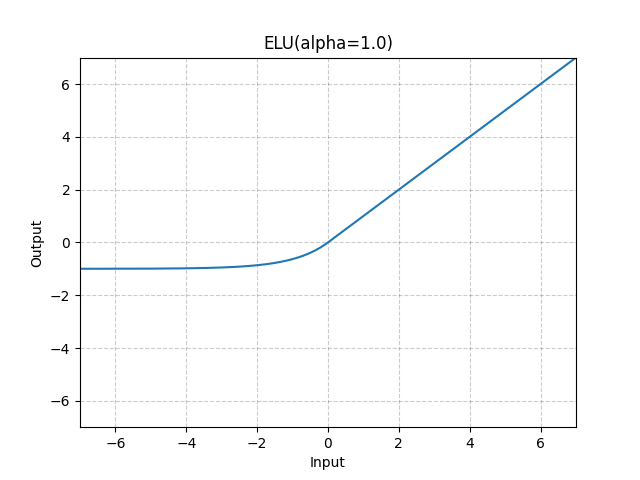

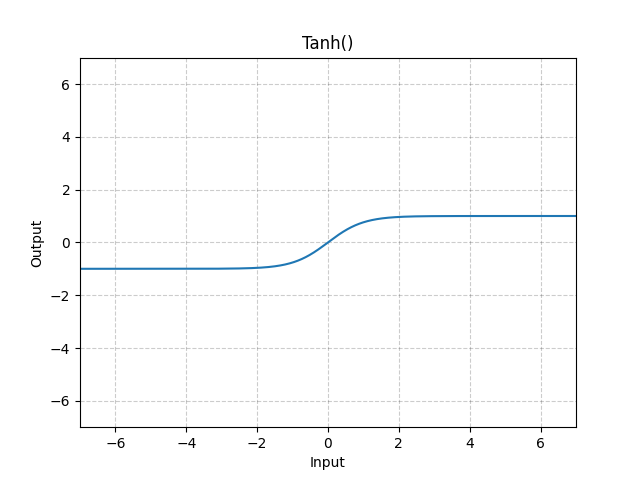

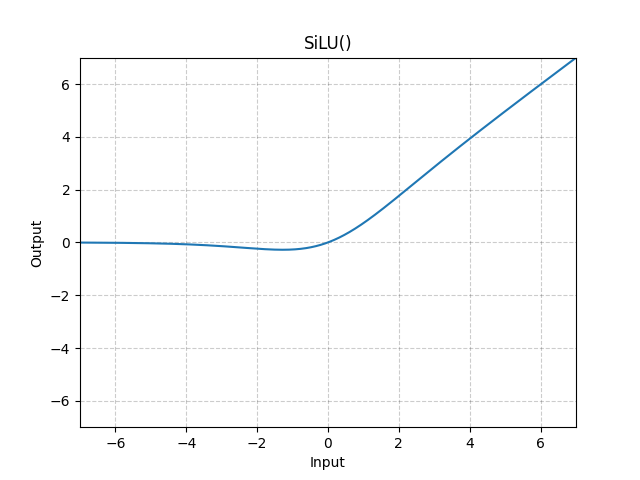

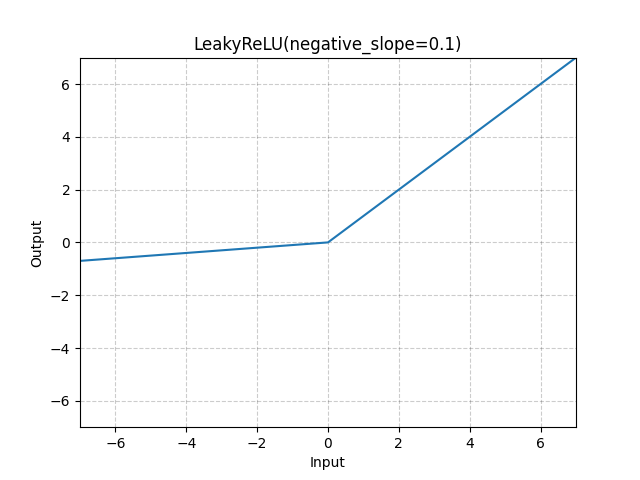
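A minimal sketch of a single perceptron, assuming the common form $y = \sigma\left(\sum_i w_i x_i + b\right)$ with inputs $x_i$, weights $w_i$, bias $b$, and a step activation $\sigma$ (the notation in the figures above may differ). The weights $(1, 1)$ and bias $-1.5$ are hypothetical values chosen so that the perceptron computes the logical AND of two binary inputs.

```python
def step(z):
    """Heaviside step activation (one common convention: 1 if z >= 0 else 0)."""
    return 1 if z >= 0 else 0

def perceptron(x, w, b, activation=step):
    """Output of a single perceptron: activation(sum_i w_i * x_i + b)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return activation(z)

# Hypothetical weights and bias that make the perceptron compute logical AND
w, b = [1.0, 1.0], -1.5
for inputs in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(inputs, "->", perceptron(inputs, w, b))
```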

Activation functions

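The goal of the activation function is to introduce non-linearity into the model: without it, the perceptron's output would be an affine function of its inputs, and stacking several such units would still only produce an affine function.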
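The role of non-linearity can be illustrated with two stacked one-dimensional units. In this sketch, the weights, biases, and test points are hypothetical, and the composition is tested with the midpoint condition $g\left(\frac{a+b}{2}\right) = \frac{g(a) + g(b)}{2}$, which every affine function satisfies. With no activation the composition passes; with ReLU, sigmoid, or tanh in between it fails, so the two-unit model is no longer affine.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    return max(0.0, z)

def identity(z):
    # "No activation": the unit's output is just its weighted sum
    return z

def two_units(x, activation):
    """Two stacked 1-D units with hypothetical weights; the activation sits between them."""
    h = activation(2.0 * x - 1.0)   # first unit: weight 2, bias -1
    return -3.0 * h + 0.5           # second unit: weight -3, bias 0.5 (no activation)

def passes_midpoint_test(g, points):
    """Necessary condition for g to be affine: g((a+b)/2) == (g(a)+g(b))/2 for every pair."""
    return all(
        math.isclose(g((a + b) / 2), (g(a) + g(b)) / 2, abs_tol=1e-9)
        for a in points for b in points
    )

points = [-2.0, -0.5, 0.0, 1.0, 3.0]
for name, act in [("none", identity), ("relu", relu),
                  ("sigmoid", sigmoid), ("tanh", math.tanh)]:
    result = passes_midpoint_test(lambda x: two_units(x, act), points)
    print(f"{name:8s} passes midpoint test: {result}")
```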
