Method

We first choose a model.

We then choose a metric to evaluate how well the model performs.

Finally, we search for the parameters of the model that give the best value of this metric.

Outline

| Approach | Pros | Cons |
|---|---|---|
| Exact solution | Efficient | Requires solvable equations; overfitting |
| Least squares | Efficient | Linear model and quadratic error only |
| Newton's method | Any model | Suboptimal, can diverge |
| Gradient descent | Any model | Suboptimal, slow |

Linear models

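Linear models are those of the form:

$$f_\theta(x) = \theta_1 \phi_1(x) + \theta_2 \phi_2(x) + \dots + \theta_n \phi_n(x)$$

where $\theta_1, \dots, \theta_n$ are the parameters and the $\phi_i$ are fixed functions of the input $x$, which may themselves be nonlinear.

Note: the models are linear in the parameters, not necessarily in $x$.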
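As a minimal sketch, assuming the model is written $f_\theta(x) = \sum_i \theta_i \phi_i(x)$ and using the hypothetical basis functions $1$, $x$, $x^2$ and $\sin x$, a linear model can be evaluated as the product of a feature matrix (one column per $\phi_i$) and the parameter vector $\theta$:

```python
import numpy as np

def features(x):
    """Hypothetical basis functions phi_i(x): 1, x, x^2 and sin(x).

    Returns an (n_samples, 4) matrix whose columns are the phi_i evaluated at x.
    """
    x = np.asarray(x, dtype=float)
    return np.column_stack([np.ones_like(x), x, x**2, np.sin(x)])

def linear_model(theta, x):
    """Evaluate f_theta(x) = sum_i theta_i * phi_i(x) for every sample in x."""
    return features(x) @ theta

theta = np.array([1.0, 2.0, 0.5, -1.0])
x = np.array([0.0, 1.0, 2.0])
print(linear_model(theta, x))
```

Because the output is a weighted sum, scaling $\theta$ scales the output and adding two parameter vectors adds the outputs: this is what "linear in the parameters" means, even though $\sin x$ and $x^2$ are nonlinear in $x$. By contrast, a model such as $\sin(\theta x)$ is not linear in $\theta$ and does not fit this form.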

Examples

Exact solutions

Given a dataset, we can first search for a solution that fits the data exactly.

In that case, the model output must equal the measured output for every data point in the dataset.

This approach is especially useful for interpolation.

Affine fitting

Let's start with the simplest example: fitting an affine model exactly through two data points.

First, let's define the model:

We can then write the equations:

This yields the system of equations:

In matrix form:
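For illustration, assuming the affine model $f(x) = a x + b$ and two data points $(x_1, y_1)$ and $(x_2, y_2)$ (notation assumed for this sketch), the equations and their matrix form would read:

```latex
\begin{cases}
a x_1 + b = y_1 \\
a x_2 + b = y_2
\end{cases}
\quad\Longleftrightarrow\quad
\underbrace{\begin{bmatrix} x_1 & 1 \\ x_2 & 1 \end{bmatrix}}_{A}
\underbrace{\begin{bmatrix} a \\ b \end{bmatrix}}_{\theta}
=
\underbrace{\begin{bmatrix} y_1 \\ y_2 \end{bmatrix}}_{y}
```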

The solution is then:
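When $A$ is invertible, the solution is $\theta = A^{-1} y$. A minimal NumPy sketch, assuming the model $f(x) = a x + b$, two hypothetical points, and a matrix $A$ whose rows are $[x_i, 1]$; it uses `np.linalg.solve`, which solves $A\theta = y$ without explicitly forming $A^{-1}$:

```python
import numpy as np

# Two hypothetical data points (x_i, y_i)
x = np.array([1.0, 3.0])
y = np.array([3.0, 7.0])

# Each row of A is [x_i, 1], so that A @ [a, b] = a * x_i + b
A = np.column_stack([x, np.ones_like(x)])

# Solve A @ theta = y (same result as inv(A) @ y, but numerically preferable)
a, b = np.linalg.solve(A, y)
print(a, b)
```

Here the line through $(1, 3)$ and $(3, 7)$ has slope $a = 2$ and intercept $b = 1$.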

Cubic fitting

We can also impose constraints on the derivatives:

We have:

Then:
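A minimal sketch, assuming (hypothetically) that the four constraints are the value and the first derivative of a cubic $f(x) = \theta_0 + \theta_1 x + \theta_2 x^2 + \theta_3 x^3$ at two points. Since $f'(x) = \theta_1 + 2\theta_2 x + 3\theta_3 x^2$ is also linear in the parameters, each constraint is simply one more row of the linear system:

```python
import numpy as np

def cubic_from_values_and_slopes(x0, y0, dy0, x1, y1, dy1):
    """Find theta = [t0, t1, t2, t3] such that f(x) = t0 + t1*x + t2*x^2 + t3*x^3
    satisfies f(x0) = y0, f'(x0) = dy0, f(x1) = y1 and f'(x1) = dy1."""
    def value_row(x):
        # Coefficients of theta in f(x) = t0 + t1 x + t2 x^2 + t3 x^3
        return [1.0, x, x**2, x**3]

    def slope_row(x):
        # Coefficients of theta in f'(x) = t1 + 2 t2 x + 3 t3 x^2 (still linear in theta)
        return [0.0, 1.0, 2.0 * x, 3.0 * x**2]

    A = np.array([value_row(x0), slope_row(x0), value_row(x1), slope_row(x1)])
    rhs = np.array([y0, dy0, y1, dy1], dtype=float)
    return np.linalg.solve(A, rhs)

# Hypothetical constraints: start at (0, 0) with zero slope, end at (1, 1) with zero slope
theta = cubic_from_values_and_slopes(0.0, 0.0, 0.0, 1.0, 1.0, 0.0)
print(theta)
```

With these particular constraints the solution is $f(x) = 3x^2 - 2x^3$, the classic "smoothstep" curve.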

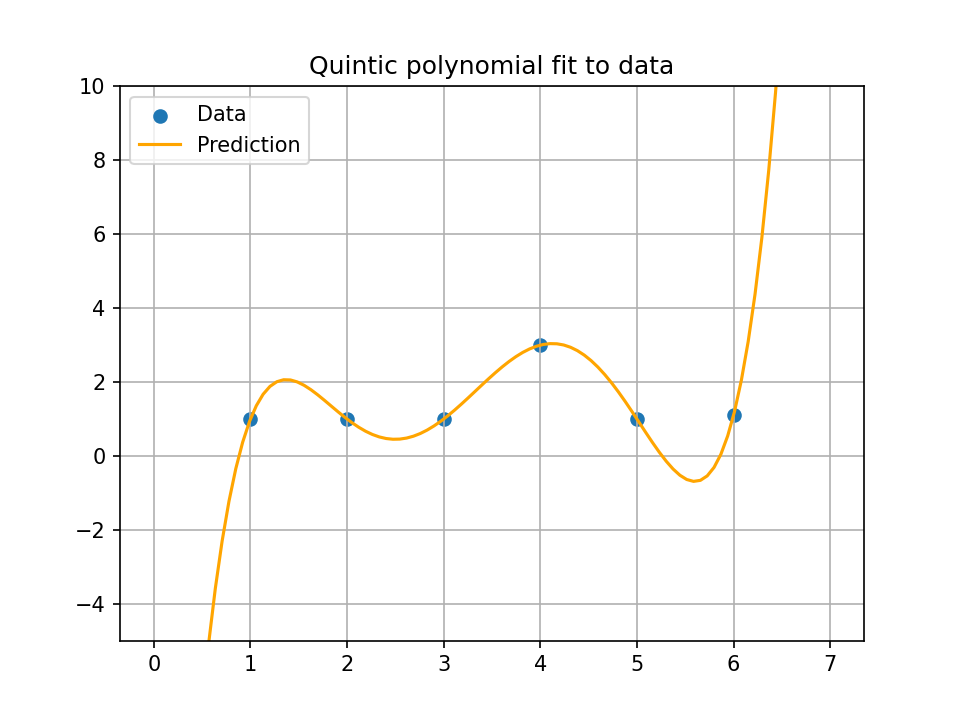

Quintic fitting

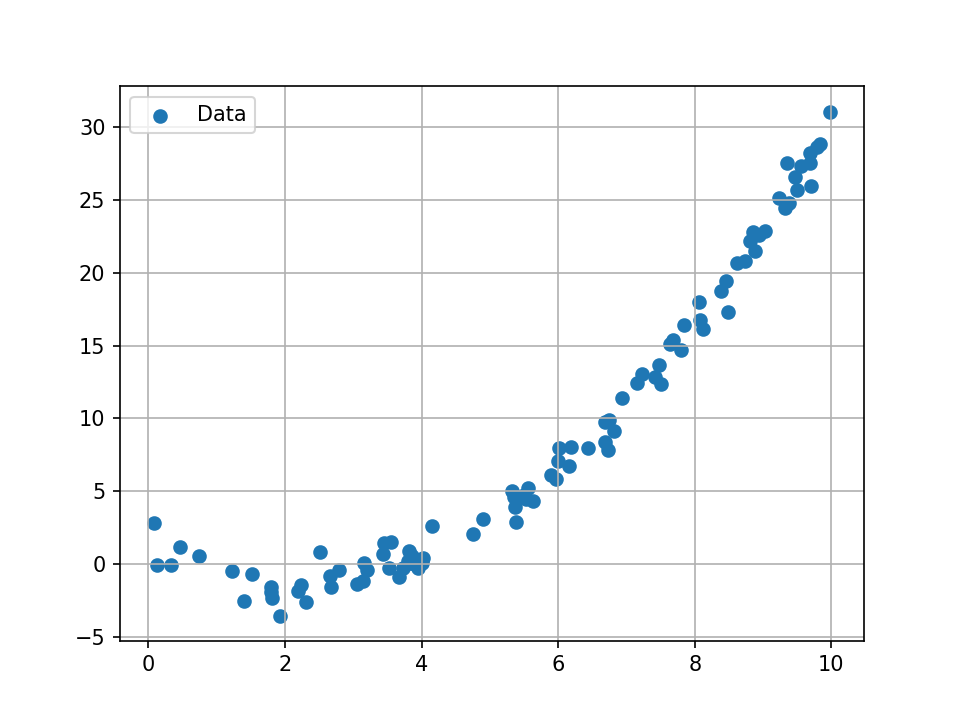

Now, what if we have 6 data points and want a model that passes exactly through all of them?

The output will look like this:

We can notice the following:

- With the exact solution, we need as many parameters as data points

- The model is overfitting the data, and will likely perform poorly on new data
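A minimal sketch of this situation with six hypothetical points that roughly follow $y = x$; `np.vander(x, 6, increasing=True)` builds the 6 by 6 matrix whose columns are $1, x, \dots, x^5$:

```python
import numpy as np

# Six hypothetical points that roughly follow y = x, with small perturbations
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([0.1, 0.9, 2.2, 2.8, 4.1, 5.0])

# Square system: 6 parameters (degree-5 polynomial) for 6 data points
A = np.vander(x, 6, increasing=True)  # columns: 1, x, x^2, ..., x^5
theta = np.linalg.solve(A, y)

# The polynomial passes exactly through every data point...
print(np.allclose(A @ theta, y))  # True

# ...but extrapolates poorly: theta holds ascending coefficients, as polyval expects
x_new = 6.0
y_pred = np.polynomial.polynomial.polyval(x_new, theta)
print(y_pred)  # about -3.2, while the trend suggests a value near 6
```

The fitted polynomial reproduces the six points exactly, yet its prediction at $x = 6$ is far from the trend of the data: the small perturbations have been fitted as if they were signal.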

Least squares

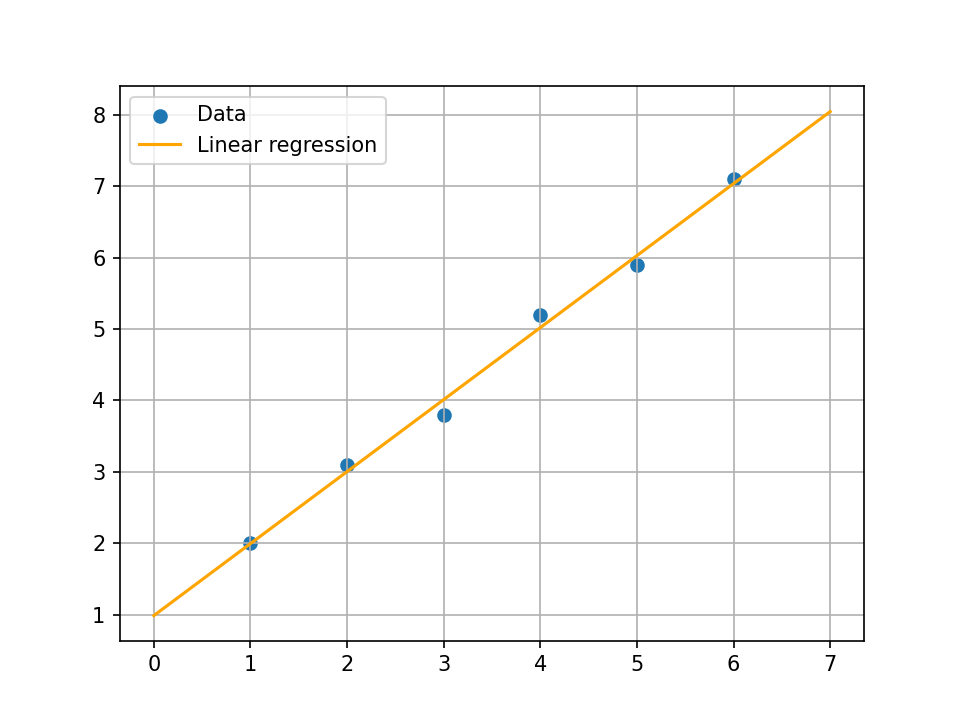

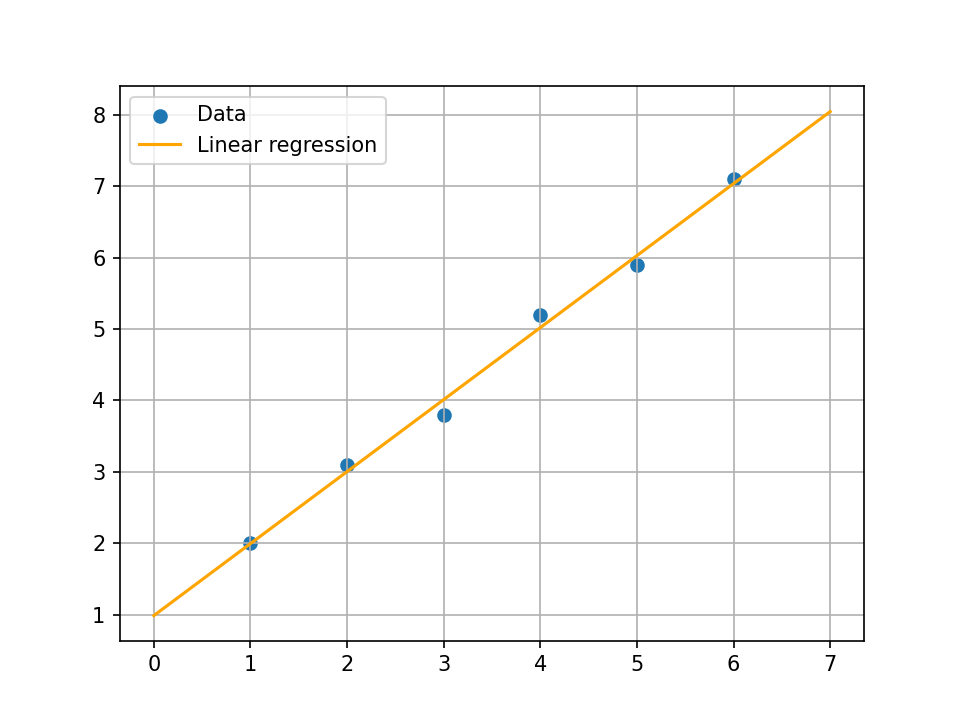

Linear regression

Suppose we have many data points and want to find the line that best fits them.

If we write the system as seen before:

Problem: the system has more equations than unknowns and, in general, no exact solution.

This is because we only have two parameters, but many data points.
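To see the problem concretely, a minimal sketch with five hypothetical points and the affine model $y = a x + b$: the matrix $A$ has one row $[x_i, 1]$ per point but only two columns. Comparing ranks (the Rouché-Capelli criterion) shows that $A\theta = y$ has no exact solution as soon as the points are not aligned:

```python
import numpy as np

# Five hypothetical points that are not exactly aligned
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.0, 2.9, 5.2, 7.1, 8.8])

# One equation a * x_i + b = y_i per point: 5 equations, 2 unknowns
A = np.column_stack([x, np.ones_like(x)])
print(A.shape)  # (5, 2)

# A @ theta = y is solvable only if appending y to A does not increase the rank
rank_A = np.linalg.matrix_rank(A)
rank_Ay = np.linalg.matrix_rank(np.column_stack([A, y]))
print(rank_A, rank_Ay)  # 2 3 -> no exact solution
```

Note also that `np.linalg.solve` only accepts square matrices, so it cannot even be applied to this $A$.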

Minimizing an error

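Idea: instead of finding parameters that fit the data exactly, we look for parameters that minimize an error between the model predictions and the data.

We will call this error the loss function.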

The most common error is the least squares error:

We then want to find the parameters that minimize this error.
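Written out, assuming $n$ data points $(x_i, y_i)$ and a model $f_\theta$ with parameters $\theta$ (notation assumed for this sketch), the least squares error and the parameters we look for are:

```latex
L(\theta) = \sum_{i=1}^{n} \big( f_\theta(x_i) - y_i \big)^2,
\qquad
\theta^\star = \arg\min_{\theta} L(\theta)
```

Squaring makes every residual contribute positively and penalizes large deviations more than small ones.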

Least squares

We have a linear model and a squared error.

Back to linear regression

Back to the linear regression problem:

Solving linear regression

First, let's write the loss for the affine model:

We can expand it to:

Then, we can compute the derivatives with respect to both parameters:

Setting the derivatives to zero, we then have:

Thus:

Substituting into the second equation:

This yields:
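For reference, a sketch of this derivation, assuming the affine model $f(x) = a x + b$, $n$ data points $(x_i, y_i)$ and their means $\bar{x} = \frac{1}{n}\sum_i x_i$, $\bar{y} = \frac{1}{n}\sum_i y_i$ (notation assumed here):

```latex
\begin{aligned}
L(a, b) &= \sum_{i=1}^{n} (a x_i + b - y_i)^2 \\
        &= a^2 \sum_i x_i^2 + 2ab \sum_i x_i + n b^2 - 2a \sum_i x_i y_i - 2b \sum_i y_i + \sum_i y_i^2 \\[4pt]
\frac{\partial L}{\partial a} &= 2a \sum_i x_i^2 + 2b \sum_i x_i - 2 \sum_i x_i y_i = 0 \\
\frac{\partial L}{\partial b} &= 2a \sum_i x_i + 2nb - 2 \sum_i y_i = 0
  \;\Rightarrow\; b = \bar{y} - a \bar{x} \\[4pt]
a &= \frac{\sum_i x_i y_i - n \bar{x} \bar{y}}{\sum_i x_i^2 - n \bar{x}^2}
\end{aligned}
```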

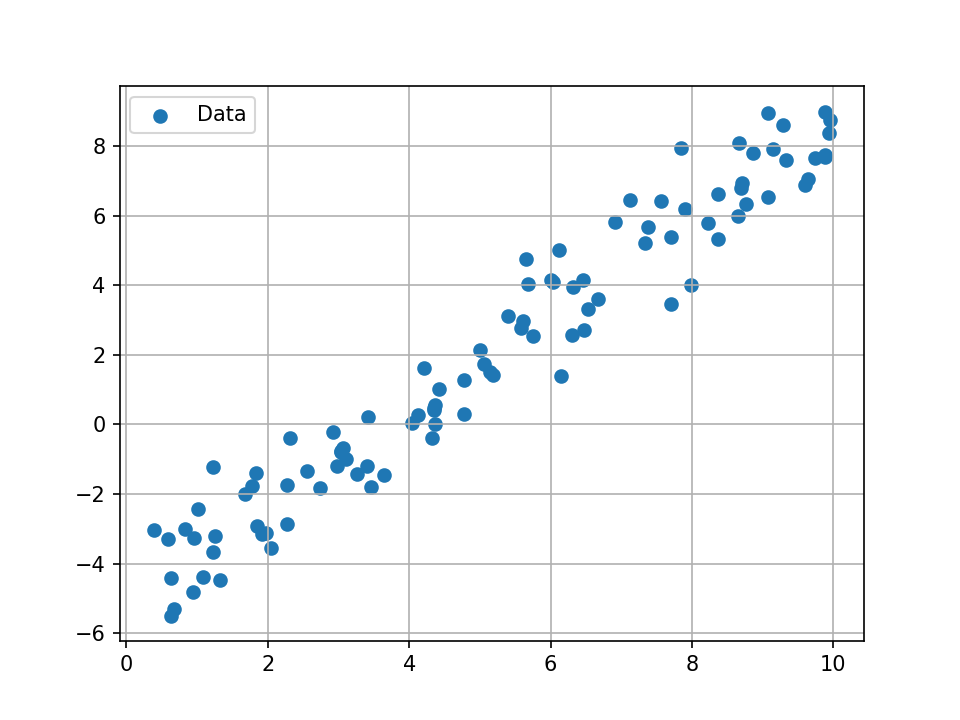

Example

We can then write some code to solve the linear regression problem:
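A minimal sketch of such code, implementing the closed-form formulas $b = \bar{y} - a\bar{x}$ and $a = \frac{\sum_i x_i y_i - n\bar{x}\bar{y}}{\sum_i x_i^2 - n\bar{x}^2}$ for the model $y \approx a x + b$. The function name is hypothetical and the data are synthetic (generated around $y = 2x + 1$), for illustration only:

```python
import numpy as np

def linear_regression(x, y):
    """Closed-form least squares fit of y ≈ a * x + b. Returns (a, b)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    x_mean, y_mean = x.mean(), y.mean()
    # a = (sum x_i y_i - n * x_mean * y_mean) / (sum x_i^2 - n * x_mean^2)
    a = (np.sum(x * y) - n * x_mean * y_mean) / (np.sum(x**2) - n * x_mean**2)
    # b = y_mean - a * x_mean
    b = y_mean - a * x_mean
    return a, b

# Synthetic noisy data around y = 2x + 1
rng = np.random.default_rng(0)
x = np.linspace(0.0, 10.0, 50)
y = 2.0 * x + 1.0 + rng.normal(scale=0.5, size=x.shape)

a, b = linear_regression(x, y)
print(a, b)
```

The estimates are close to, but not exactly, $2$ and $1$ because of the noise; they match what `np.polyfit(x, y, 1)` returns on the same data.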

A more general form

Remember that we can write the exact solution equations in matrix form:

The loss function is then:

This expands to:

The derivatives can then be computed as:

Setting the derivative to zero yields:

And thus:
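A sketch of these steps in matrix form, assuming the exact-solution system is written $A\theta = y$ (one row of $A$ per data point, one column per parameter) and that $A^\top A$ is invertible, i.e. $A$ has full column rank:

```latex
\begin{aligned}
L(\theta) &= \lVert A\theta - y \rVert^2 = (A\theta - y)^\top (A\theta - y) \\
          &= \theta^\top A^\top A \theta - 2\, y^\top A \theta + y^\top y \\
\nabla_\theta L &= 2 A^\top A \theta - 2 A^\top y = 0 \\
\theta &= (A^\top A)^{-1} A^\top y
\end{aligned}
```

The matrix $(A^\top A)^{-1} A^\top$ is the Moore-Penrose pseudo-inverse $A^+$ of such an $A$; when $A$ is square and invertible it reduces to $A^{-1}$, which recovers the exact solution.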

The least squares solution can then be obtained with exactly the same approach as the exact solution, replacing the inverse with the pseudo-inverse:

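```python
import numpy as np

A = np.array([[2.0, 1.0], [1.0, 3.0]])  # example matrix (square and invertible)

np.linalg.inv(A)   # inverse: A must be square and invertible
np.linalg.pinv(A)  # pseudo-inverse: defined for any shape of A
```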
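A minimal sketch of the difference between the two functions, using hypothetical matrices: for a square invertible matrix both agree, while for a tall matrix only `pinv` is defined, and it matches $(A^\top A)^{-1} A^\top$ when $A$ has full column rank:

```python
import numpy as np

# Hypothetical square, invertible matrix: the pseudo-inverse equals the inverse
B = np.array([[2.0, 1.0], [1.0, 3.0]])
print(np.allclose(np.linalg.pinv(B), np.linalg.inv(B)))  # True

# Hypothetical tall matrix (4 equations, 2 unknowns): inv() rejects it...
A = np.array([[0.0, 1.0], [1.0, 1.0], [2.0, 1.0], [3.0, 1.0]])
try:
    np.linalg.inv(A)
except np.linalg.LinAlgError as err:
    print("inv failed:", err)

# ...but pinv() works, and matches (A^T A)^-1 A^T since A has full column rank
A_pinv = np.linalg.pinv(A)
print(np.allclose(A_pinv, np.linalg.inv(A.T @ A) @ A.T))  # True
```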

Linear regression with pseudo-inverse

We can then write some code using `numpy.linalg.pinv` to solve the linear regression problem, which yields the same result:

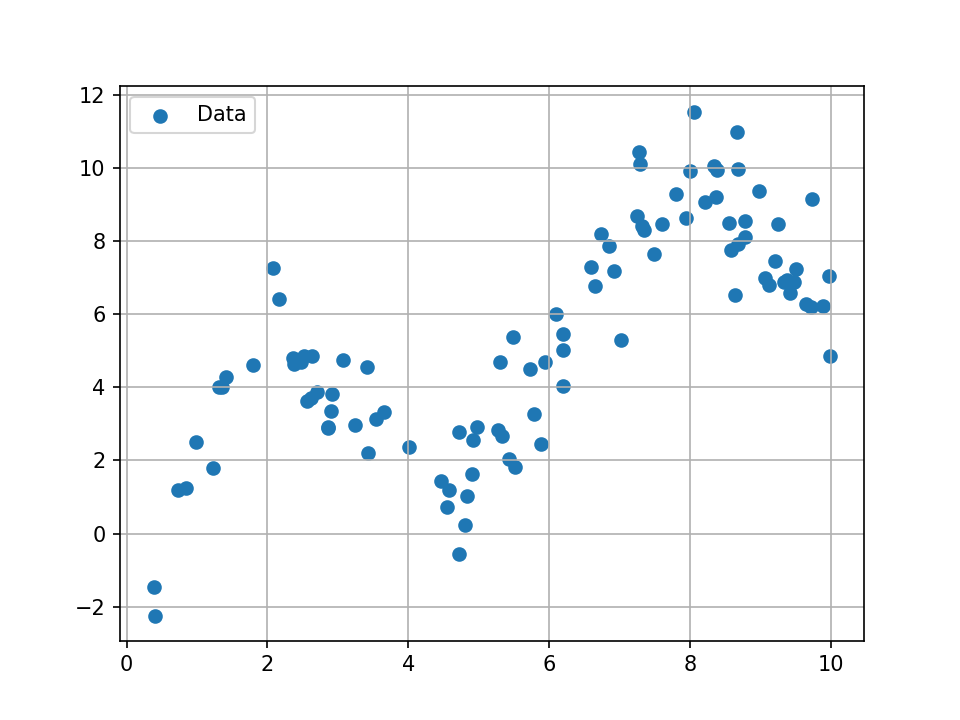
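A minimal sketch of that code, assuming the affine model $y \approx a x + b$ and synthetic noisy data (not the data from the figure): each row of the matrix is $[x_i, 1]$, and the parameters are obtained as `np.linalg.pinv(A) @ y`:

```python
import numpy as np

# Synthetic noisy data around y = 2x + 1
rng = np.random.default_rng(0)
x = np.linspace(0.0, 10.0, 50)
y = 2.0 * x + 1.0 + rng.normal(scale=0.5, size=x.shape)

# One row [x_i, 1] per data point, so that A @ [a, b] = a * x_i + b
A = np.column_stack([x, np.ones_like(x)])

# Least squares solution: theta = pinv(A) @ y
a, b = np.linalg.pinv(A) @ y
print(a, b)
```

Since the least squares minimizer is unique here, this gives the same $a$ and $b$ as the closed-form formulas for linear regression (and as `np.polyfit(x, y, 1)`), but it generalizes directly to any linear model by adding columns to `A`.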

Practical exercises

Let's write some Python!

On the next slide, you will find data and models.

Hint: to load the data, you can use:

```python
import numpy as np

data = np.loadtxt('data.csv')
```

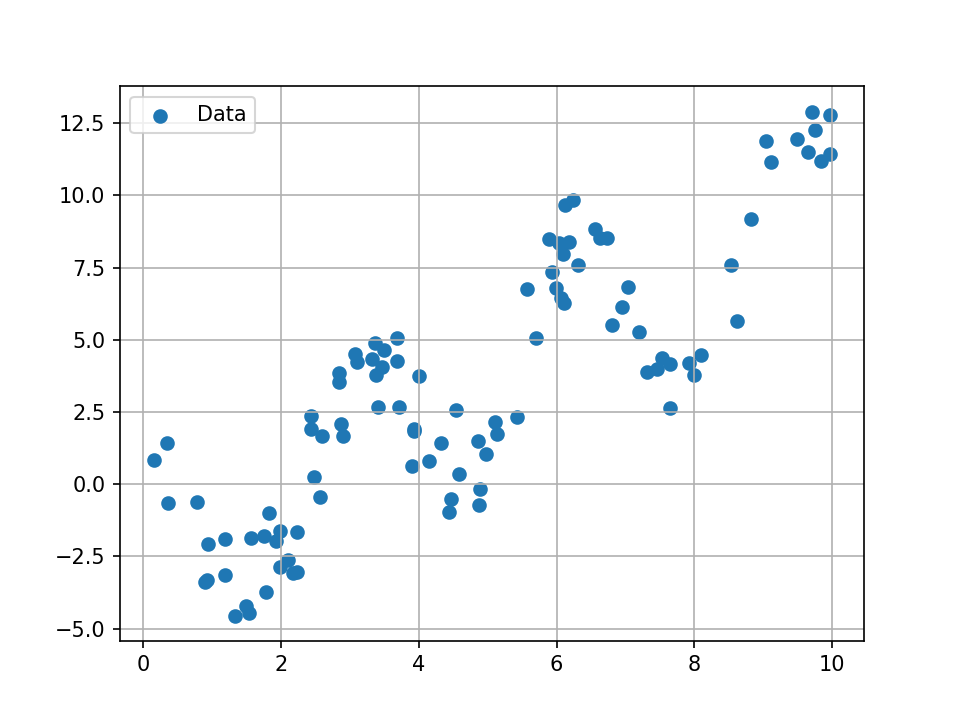
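As a possible starting point for the exercises (not their expected solution), a minimal sketch of a generic least squares fit for any linear model given as a list of basis functions. The helper name `fit_linear_model` is hypothetical, and the commented loading lines assume, hypothetically, that the input is in the first column and the output in the second:

```python
import numpy as np

def fit_linear_model(x, y, basis_functions):
    """Least squares fit of y ≈ sum_k theta_k * phi_k(x).

    basis_functions is a list of callables phi_k, each applied to the whole array x.
    Returns the parameter vector theta (one entry per basis function).
    """
    A = np.column_stack([phi(x) for phi in basis_functions])
    return np.linalg.pinv(A) @ y

# Synthetic example; for the exercise, something like
#   data = np.loadtxt('data.csv'); x, y = data[:, 0], data[:, 1]
# could replace these two lines (hypothetical column layout).
x = np.linspace(-1.0, 1.0, 20)
y = 0.5 - 2.0 * x + 3.0 * x**2

theta = fit_linear_model(x, y, [np.ones_like, lambda t: t, lambda t: t**2])
print(theta)  # recovers [0.5, -2.0, 3.0] up to rounding, since the data are noise-free
```

Changing the list of basis functions is enough to try another linear model on the same data.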

Practical case: identifying an RR robot

Let us consider the following RR robot:

We have:

The model is linear in the parameters.
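Assuming the kinematics of a standard planar RR arm, with two links of unknown lengths $l_1$ and $l_2$ (hypothetical names), joint angles $\alpha$ and $\beta$ with $\beta$ measured relative to the first link, and the end effector at $(x_e, y_e)$, the model would read:

```latex
\begin{aligned}
x_e &= l_1 \cos\alpha + l_2 \cos(\alpha + \beta) \\
y_e &= l_1 \sin\alpha + l_2 \sin(\alpha + \beta)
\end{aligned}
\qquad\Longleftrightarrow\qquad
\begin{bmatrix} x_e \\ y_e \end{bmatrix}
=
\begin{bmatrix} \cos\alpha & \cos(\alpha+\beta) \\ \sin\alpha & \sin(\alpha+\beta) \end{bmatrix}
\begin{bmatrix} l_1 \\ l_2 \end{bmatrix}
```

Under these assumptions, the unknowns $l_1$ and $l_2$ appear linearly even though the angles enter through sines and cosines.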

Here are some data generated with this script.

They contain noisy measurements; each row holds the joint angles `alpha`, `beta` and the end-effector position `x_e`, `y_e`.

```python
import numpy as np

data = np.loadtxt('data/rr_data.csv')

alpha, beta, x_e, y_e = data[0]  # first sample (one row per measurement)
```
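A minimal sketch of the identification, assuming the kinematics of a standard planar RR arm ($x_e = l_1\cos\alpha + l_2\cos(\alpha+\beta)$ and $y_e = l_1\sin\alpha + l_2\sin(\alpha+\beta)$, with unknown link lengths $l_1$, $l_2$): each sample contributes two rows to the system, and the lengths are obtained with the pseudo-inverse. The function name `identify_rr` is hypothetical, and the check below uses synthetic, noise-free data rather than `rr_data.csv`:

```python
import numpy as np

def identify_rr(alpha, beta, x_e, y_e):
    """Estimate the link lengths (l1, l2) of a planar RR arm by least squares.

    Assumes x_e = l1 cos(alpha) + l2 cos(alpha + beta)
            y_e = l1 sin(alpha) + l2 sin(alpha + beta)
    Rows for all x_e equations come first, then rows for all y_e equations.
    """
    A = np.vstack([
        np.column_stack([np.cos(alpha), np.cos(alpha + beta)]),
        np.column_stack([np.sin(alpha), np.sin(alpha + beta)]),
    ])
    b = np.concatenate([x_e, y_e])
    l1, l2 = np.linalg.pinv(A) @ b
    return l1, l2

# Synthetic check with made-up lengths; with the real file, one could call
#   identify_rr(*np.loadtxt('data/rr_data.csv').T)
rng = np.random.default_rng(1)
alpha = rng.uniform(-np.pi, np.pi, 100)
beta = rng.uniform(-np.pi, np.pi, 100)
x_e = 0.3 * np.cos(alpha) + 0.2 * np.cos(alpha + beta)
y_e = 0.3 * np.sin(alpha) + 0.2 * np.sin(alpha + beta)
print(identify_rr(alpha, beta, x_e, y_e))  # (0.3, 0.2) up to rounding, as there is no noise
```

With noisy measurements the estimates will no longer be exact, but stacking many samples averages the noise out in the least squares sense.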